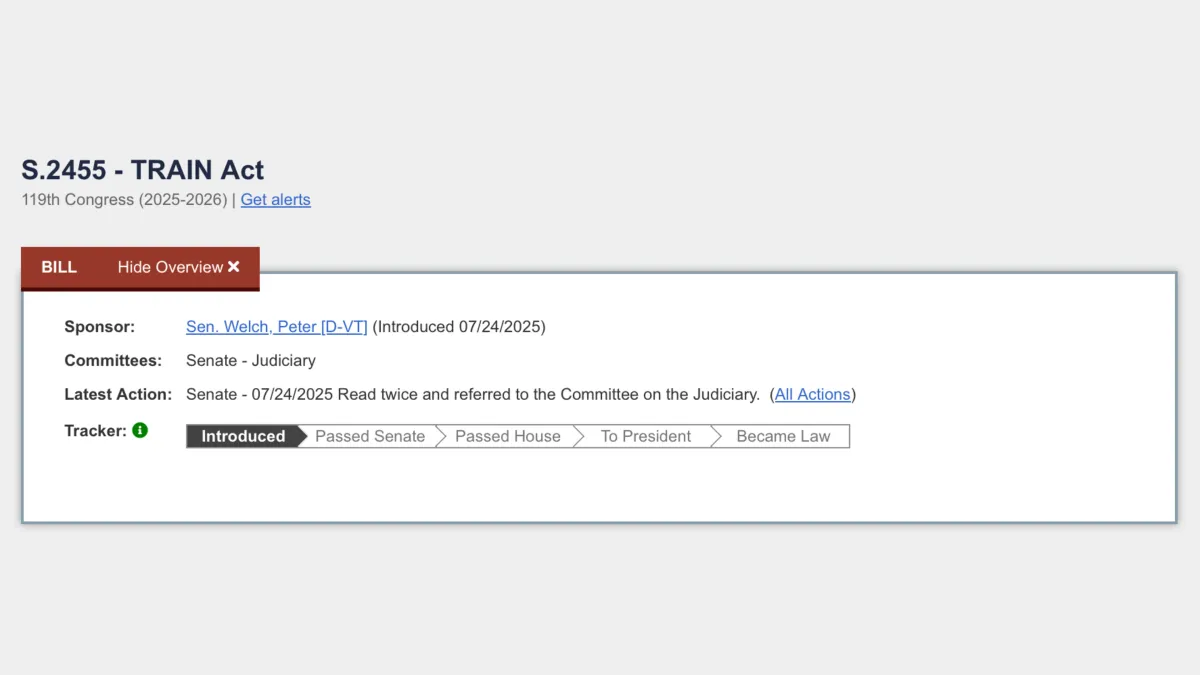

Vermont Senator Peter Welch introduced the Transparency and Responsibility for Artificial Intelligence Networks Act (TRAIN Act) on July 24, 2025, establishing a new administrative subpoena mechanism allowing copyright owners to determine which of their protected works were used to train artificial intelligence models. The bipartisan legislation, designated S.2455, received immediate support from Republican Senators Marsha Blackburn of Tennessee and Josh Hawley of Missouri, alongside Democratic Senator Adam Schiff of California.

According to the bill text submitted to the Senate Judiciary Committee, the legislation creates a streamlined process for copyright holders to request that district court clerks issue subpoenas to AI developers. These subpoenas would compel disclosure of training materials or records sufficient to identify copyrighted works with certainty. The measure specifically targets generative artificial intelligence models that emulate the structure of their input data to generate synthetic content including images, videos, audio, and text.

The procedural framework outlined in Section 2 of the bill requires copyright owners to demonstrate a subjective good-faith belief that developers used their protected works for training purposes. Requesters must file proposed subpoenas alongside sworn declarations stating that their purpose is to protect copyright holder rights rather than to pursue broader discovery. The legislation explicitly limits subpoenas to works owned or controlled by the requesting party, preventing fishing expeditions across unrelated copyrighted materials.

Technical definitions within the 8-page bill establish precise parameters for covered technologies. According to Section 514(a)(2), artificial intelligence models encompass information system components implementing AI technology through computational, statistical, or machine-learning techniques producing outputs from given inputs. The legislation defines developers as entities designing, coding, producing, owning, or substantially modifying generative AI models, while excluding noncommercial end users from coverage.

Training material specifications under subsection (6) include individual works or components used for AI model training purposes, encompassing text, images, audio, and other expressive materials alongside descriptive annotations. The bill establishes substantial modification standards requiring actions leading to new versions, releases, or updates materially changing model functionality or performance through retraining or fine-tuning processes.

Enforcement mechanisms within the proposed law create a rebuttable presumption against non-compliant developers. According to Section 514(i), a developer's failure to comply with an issued subpoena gives rise to a rebuttable presumption that the developer copied the copyrighted work. The legislation incorporates the Federal Rules of Civil Procedure governing subpoena issuance, service, and enforcement while requiring receiving developers to disclose expeditiously.

Confidentiality provisions under subsection (g) restrict copyright owners from disclosing received copies or records without proper authorization or consent. The bill includes sanctions for bad-faith subpoena requests, applying Rule 11(c) of the Federal Rules of Civil Procedure to penalize requesters who make frivolous claims. Courts may impose these sanctions on a motion by a subpoena recipient showing that the request was made in bad faith.

The timing proves significant as multiple high-profile copyright lawsuits challenge AI companies' training practices across federal courts. Recent cases including Kadrey v. Meta Platforms and ongoing litigation against Anthropic PBC have produced mixed rulings on fair use applications to AI training scenarios. The June Meta ruling found fair use in specific circumstances, while the Anthropic case distinguished between legitimate training uses and pirated content acquisition.

Industry response to pending copyright legislation reflects broader tensions surrounding AI development and content creator rights. Mediavine's August petition demanding immediate Copyright Office action represents growing publisher frustration with current regulatory approaches. The ad management company, representing over 17,000 digital publishers, explicitly rejected Copyright Office recommendations for market self-regulation.

Legal precedent from recent court decisions demonstrates judicial willingness to examine AI training practices under existing copyright frameworks. Federal judges have consistently applied four-factor fair use analysis while distinguishing between transformative training uses and direct copyright infringement. However, courts have emphasized case-specific limitations, preventing broad applications across different AI companies and training methodologies.

The Copyright Office's comprehensive examination of AI-copyright intersections provides crucial context for legislative action. Its May 2025 generative AI training report addressed contentious fair use questions, while its February economic analysis established frameworks for evaluating AI technology impacts on traditional copyright incentives and market dynamics.

Academic research raises important considerations about copyright law's role in AI regulation. Professor Carys Craig's Chicago-Kent Law Review paper warned against expanding copyright restrictions on AI training data, arguing such measures could create cost-prohibitive barriers favoring powerful market players while limiting beneficial AI development across healthcare and scientific research sectors.

International approaches demonstrate varying strategies for balancing copyright protection with AI innovation needs. Research published in the Emory Law Journal revealed surprising convergence among major jurisdictions including the United States, European Union, Japan, and China toward copyright exceptions facilitating AI development. Countries increasingly embrace exceptions despite varying legal traditions and economic conditions.

The TRAIN Act's administrative subpoena mechanism represents a middle-ground approach between expansive copyright enforcement and unrestricted AI development. Unlike comprehensive licensing requirements or broad fair use exemptions, the legislation provides targeted discovery tools enabling copyright holders to determine usage without predetermining infringement outcomes. This procedural focus avoids substantive copyright law modifications while addressing information asymmetries between rights holders and AI developers.

Implementation challenges may arise from technical complexities surrounding AI training dataset documentation. Many AI companies have historically maintained limited records of specific copyrighted works within massive training corpora. The legislation's expeditious disclosure requirements could necessitate significant changes to current data management practices across the AI industry.

Economic implications extend beyond individual copyright disputes to broader questions about AI development costs and market competition. Venture capital firms including Andreessen Horowitz have argued that changing current fair use interpretations could disrupt settled expectations and potentially harm United States competitiveness against nations with more permissive AI training policies.

The bipartisan support signals potential legislative momentum despite traditional partisan divisions on technology regulation issues. Senator Welch's leadership on AI transparency measures aligns with broader Democratic concerns about technology company accountability, while Republican cosponsors focus on intellectual property protection and market-based solutions to AI governance challenges.

Timeline

- March 2024: U.S. Copyright Office announces multi-section AI and copyright initiative

- July 2024: Copyright Office releases Part 1 of AI report addressing digital replicas

- October 2024: Global study reveals copyright law convergence on AI training

- January 2025: Copyright Office publishes Part 2 clarifying AI-generated content rules

- February 2025: Copyright Office explores economic effects of AI on creative works

- May 2025: Copyright Office releases major AI training report

- June 2025: Court rules Meta used copyrighted books legally for AI training

- July 2025: Court finds fair use in AI training but rejects piracy defense

- July 24, 2025: Senator Welch introduces TRAIN Act (S.2455)

- August 2025: Mediavine launches petition demanding AI copyright protections

PPC Land explains

Copyright owners: Individuals and legal entities holding exclusive rights to original creative works, including authors, publishers, musicians, and content creators. Under the TRAIN Act, copyright owners gain new tools to investigate potential unauthorized use of their protected materials in AI training datasets. The legislation specifically requires copyright owners to demonstrate a subjective good-faith belief that their works were used for training purposes before requesting subpoenas.

Artificial intelligence models: Information system components implementing AI technology through computational, statistical, or machine-learning techniques to produce outputs from given inputs. The TRAIN Act focuses specifically on generative AI models that emulate the structure of their input data to create synthetic content including text, images, videos, and audio. These models require massive datasets for training purposes, often incorporating copyrighted materials without explicit permission.

Training datasets: Collections of data used to teach AI models how to generate outputs by learning patterns and relationships within the information. Training datasets for generative AI often contain millions of copyrighted works including books, articles, images, and other creative materials. The TRAIN Act seeks to provide transparency about which specific copyrighted works appear in these datasets.

Subpoenas: Legal orders compelling individuals or organizations to provide testimony or documents in legal proceedings. The TRAIN Act creates administrative subpoenas issued by district court clerks rather than requiring full litigation. These subpoenas would compel AI developers to disclose training materials or records identifying copyrighted works with certainty.

Fair use: Copyright law doctrine allowing limited use of protected works without permission for purposes including criticism, comment, news reporting, teaching, scholarship, or research. Recent court decisions have applied fair use analysis to AI training, with mixed results depending on specific circumstances. The TRAIN Act does not modify fair use standards but provides discovery mechanisms to determine whether unauthorized copying occurred.

Generative AI: Artificial intelligence systems designed to create new content by learning patterns from training data and generating outputs that emulate the characteristics of input materials. Generative AI models can produce text, images, audio, videos, and other digital content. The technology raises complex copyright questions because it requires exposure to vast amounts of existing creative works during training.

AI developers: Entities that design, code, produce, own, or substantially modify generative AI models for commercial or research purposes. Under the TRAIN Act, developers include companies and organizations engaged in training dataset curation or supervision. The legislation excludes noncommercial end users from developer definitions while focusing on entities with substantial control over AI model development.

Senate Judiciary Committee: Congressional committee with jurisdiction over federal courts, constitutional amendments, immigration, patents, copyrights, and other legal matters. The TRAIN Act was referred to this committee following introduction by Senator Welch. The committee will examine the legislation through hearings and markup processes before potential floor consideration.

Copyright infringement: Unauthorized use of copyrighted material that violates exclusive rights granted to copyright owners including reproduction, distribution, public performance, and creation of derivative works. AI training potentially involves reproduction of copyrighted works during dataset assembly and model training processes. The TRAIN Act provides tools for investigating potential infringement without predetermining legal outcomes.

Bipartisan legislation: Congressional measures receiving support from members of both major political parties, indicating broader consensus on policy approaches. The TRAIN Act's bipartisan sponsorship suggests potential legislative viability despite traditional partisan divisions on technology regulation. Republican and Democratic cosponsors bring different perspectives on intellectual property protection and AI governance to the legislation.

Summary

Who: Senator Peter Welch (D-VT) sponsored the TRAIN Act with bipartisan cosponsors including Senators Marsha Blackburn (R-TN), Josh Hawley (R-MO), and Adam Schiff (D-CA). The legislation affects copyright owners, AI developers, and federal district courts.

What: The Transparency and Responsibility for Artificial Intelligence Networks Act establishes an administrative subpoena process allowing copyright owners to identify their protected works used in AI training datasets. The bill creates streamlined procedures for obtaining disclosure from AI developers through district court clerks.

When: The legislation was introduced on July 24, 2025, and referred to the Senate Judiciary Committee. The bill would take effect upon enactment if passed by Congress and signed into law.

Where: The federal legislation applies across United States district courts and affects AI developers operating within U.S. jurisdiction. The bill was introduced in the U.S. Senate as part of the 119th Congress.

Why: The legislation addresses information asymmetries between copyright holders and AI companies regarding training dataset contents. Growing litigation and industry disputes over AI training practices prompted lawmakers to provide copyright owners with discovery tools to determine unauthorized use of their protected works.