YouTube acknowledged on August 20, 2025, that it has been using artificial intelligence to modify videos without informing content creators or obtaining their consent. The revelation came after months of creator complaints about strange visual artifacts appearing in their content, prompting an official response from the platform's liaison team.

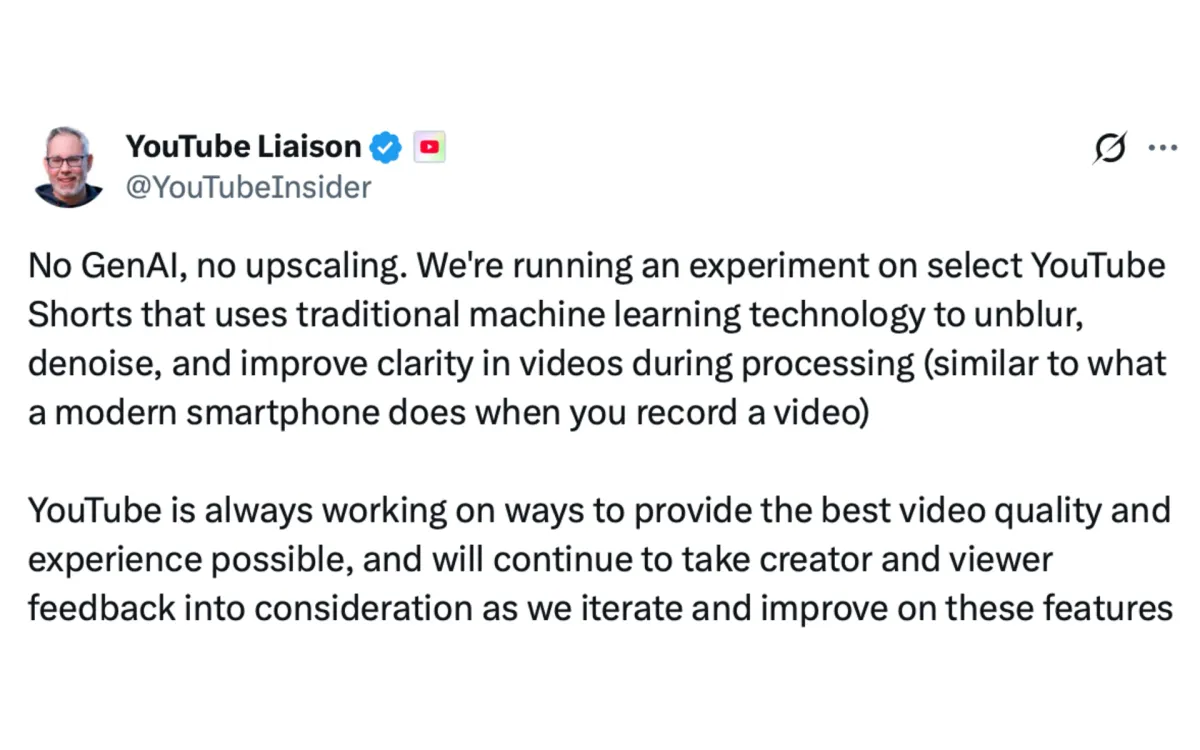

According to YouTube's head of editorial and creator liaison Rene Ritchie, the company has been "running an experiment on select YouTube Shorts that uses traditional machine learning technology to unblur, denoise, and improve clarity in videos during processing." The modifications have been affecting video content since at least June 2025, based on social media complaints dating back to that period.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

The AI enhancement system processes videos automatically during upload, making subtle changes that creators described as unwelcome. Rick Beato, a music YouTube creator with over five million subscribers, noticed his appearance looked unusual in recent videos. "I was like 'man, my hair looks strange'. And the closer I looked it almost seemed like I was wearing makeup," Beato said in a BBC interview published August 24, 2025.

Rhett Shull, another music YouTuber who investigated the issue, posted a video on the subject that accumulated over 500,000 views. "If I wanted this terrible over-sharpening I would have done it myself. But the bigger thing is it looks AI-generated," Shull explained. "I think that deeply misrepresents me and what I do and my voice on the internet."

The technical implementation uses machine learning algorithms to modify various visual elements during video processing. According to creator reports, the system sharpens certain areas while smoothing others, defines wrinkles in clothing more prominently, and can cause distortions in body parts like ears. These modifications occur without any notification or option for creators to opt out of the enhancement process.

YouTube's response emphasized the distinction between different AI technologies. Ritchie stated the platform uses "traditional machine learning" rather than generative AI, drawing a line between enhancement algorithms and content creation systems. However, according to Samuel Woolley, the Dietrich chair of disinformation studies at the University of Pittsburgh, this represents a "misdirection" since machine learning constitutes a subfield of artificial intelligence.

The timing of these revelations coincides with broader industry discussions about AI transparency in content modification. Earlier in 2025, YouTube implemented mandatory disclosure requirements for AI-generated content, effective May 25, requiring creators to label synthetically altered material. The company also clarified its monetization policies regarding AI content in July 2025.

Content creators have expressed particular concern about the lack of consent in the modification process. Unlike smartphone cameras that offer users control over AI enhancement features, YouTube's system operates without creator knowledge or approval. The modifications potentially impact how audiences perceive creator content, raising questions about artistic integrity and authenticity.

The broader implications extend beyond individual creator concerns. According to Woolley, the practice represents how "AI is increasingly a medium that defines our lives and realities." The professor argues that when companies modify content without disclosure, it risks "blurring the lines of what people can trust online."

Some YouTubers have reported their content being mistaken for AI-generated material due to the visual artifacts introduced by the enhancement system. This creates additional challenges for creators who must now address audience questions about whether their content is authentic, despite the modifications being applied without their knowledge.

The technical implementation appears limited to YouTube Shorts, the platform's short-form video feature designed to compete with TikTok. YouTube has not disclosed how many creators or videos have been affected by the experimental enhancement system, describing it only as affecting "select" content.

YouTube's approach differs significantly from other platform modifications. Traditional video compression and quality adjustments maintain the original content's essential character, while the AI enhancement system actively modifies visual elements to meet algorithmic preferences for image quality.


The controversy highlights growing tensions between platform optimization and creator autonomy. While YouTube positions the enhancements as quality improvements similar to smartphone camera processing, creators argue the modifications fundamentally alter their artistic intent without permission.

Industry experts note this development occurs amid increasing scrutiny of AI implementation in content platforms. Earlier controversies include Samsung's AI-enhanced moon photography in 2023 and, more recently, Netflix's apparent AI remastering of 1980s television content, both of which raised broader questions about transparency in automated content modification.

The marketing community faces significant implications: 86% of ad buyers are using or planning to use AI for video advertisement creation, according to an Interactive Advertising Bureau report from July 2025. YouTube's undisclosed modifications could affect how branded content appears to audiences, potentially impacting campaign effectiveness and brand representation.

Content authenticity has become increasingly important as artificial intelligence capabilities advance. The platform's decision to implement enhancements without disclosure contradicts growing industry emphasis on transparency, particularly given YouTube's own requirements for creators to label AI-generated content.

YouTube has not responded to questions about whether creators will receive options to control AI modifications of their content. The platform stated it will "continue to take creator and viewer feedback into consideration as we iterate and improve on these features," but provided no timeline for potential policy changes.

The revelation underscores the evolving relationship between AI technology and creative content. As machine learning systems become more sophisticated, platforms face decisions about implementation transparency and creator control over automated modifications.

Some creators remain supportive of YouTube's experimentation approach despite the controversy. Beato, while initially concerned about the modifications, acknowledged that "YouTube is constantly working on new tools and experimenting with stuff. They're a best-in-class company, I've got nothing but good things to say. YouTube changed my life."

The debate reflects broader questions about AI's role in mediating reality. According to Jill Walker Rettberg, a professor at the Center for Digital Narrative at the University of Bergen, the situation raises fundamental questions about authenticity. "With algorithms and AI, what does this do to our relationship with reality?" Rettberg asked.

Current industry developments suggest this controversy may influence future platform policies. Google's broader AI integration across advertising platforms and increasing automation in content delivery systems indicate that similar transparency questions will likely emerge across multiple platforms.

The YouTube situation demonstrates the challenges platforms face in balancing technological innovation with creator expectations and user trust. As AI capabilities continue advancing, the industry must address fundamental questions about consent, transparency, and the preservation of creative authenticity in an increasingly automated content ecosystem.


Timeline

- March 18, 2024 - YouTube unveils transparency tools for AI-altered content

- May 25, 2025 - YouTube introduces mandatory disclosure for AI content

- June 2025 - First creator complaints about unusual video artifacts appear on social media

- July 15, 2025 - YouTube clarifies "inauthentic content" policy changes

- July 16, 2025 - IAB report reveals 86% of buyers using AI for video ads

- August 20, 2025 - YouTube officially confirms AI enhancement experiment through Rene Ritchie's social media response

- August 24, 2025 - BBC publishes comprehensive investigation featuring affected creator interviews

PPC Land explains

Artificial Intelligence (AI): Advanced computational systems that simulate human intelligence processes, including learning, reasoning, and pattern recognition. In YouTube's case, AI refers to machine learning algorithms that automatically analyze and modify video content during processing. The technology operates without human intervention, making decisions about visual enhancements based on predetermined parameters and training data from millions of video samples.

Machine Learning: A subset of artificial intelligence that enables systems to automatically learn and improve from experience without explicit programming. YouTube's enhancement system uses machine learning to identify visual elements requiring modification, such as blurry areas or noise patterns. The algorithms continuously refine their processing capabilities based on video analysis, though creators cannot influence these automated decisions.

YouTube Shorts: The platform's short-form video feature launched to compete with TikTok, supporting videos up to three minutes in length (extended from 60 seconds in October 2024). Shorts represents a significant portion of YouTube's content strategy, with the AI enhancement experiment currently limited to this format. The feature handles a very large volume of uploads, which makes automated processing attractive to the platform but raises questions about creator consent and content authenticity.

Content Enhancement: The automated process of improving video quality through algorithmic modifications including denoising, sharpening, and clarity adjustments. YouTube's enhancement system operates during video upload processing, applying changes before content reaches viewers. These modifications can alter the original artistic intent, creating tension between technical quality improvements and creative authenticity that creators specifically intended.
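To make the sharpening complaint concrete, the sketch below applies a textbook unsharp mask to a one-dimensional row of brightness values. It is an illustration only: YouTube has not disclosed how its enhancement works, and the function names (`box_blur`, `unsharp_mask`), the `amount` and `radius` parameters, and the sample values are all assumptions chosen for this example.

```python
def box_blur(signal, radius=1):
    """Smooth a 1-D signal by averaging each sample with its neighbours."""
    n = len(signal)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(signal, amount=1.0, radius=1):
    """Sharpen by adding back the difference between the signal and its blur."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


# Hypothetical brightness values (0 = black, 1 = white) across a clean edge,
# for example the boundary between hair and a lighter background.
edge = [0.2, 0.2, 0.2, 0.2, 0.8, 0.8, 0.8, 0.8]

mild = unsharp_mask(edge, amount=0.5)    # gentle sharpening
strong = unsharp_mask(edge, amount=3.0)  # aggressive sharpening

print([round(v, 2) for v in mild])
print([round(v, 2) for v in strong])
```

With these inputs, the flat regions are left unchanged, while the two samples next to the edge are pushed apart. At the aggressive setting they fall outside the 0 to 1 brightness range, which in a real image would be clipped and appear as dark and bright halos along edges. That kind of overshoot is one plausible reason heavily sharpened footage can look artificial.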

Creator Consent: The principle that content creators should have control over modifications made to their original work. YouTube's AI enhancement system operates without explicit creator permission, raising ethical questions about platform authority over user-generated content. This lack of consent contrasts with smartphone camera features that allow users to enable or disable AI enhancements before recording.

Visual Artifacts: Unintended visual distortions or anomalies introduced by AI processing systems that can make content appear artificially generated. Creators reported various artifacts including unusual sharpening effects, skin texture modifications, and facial feature distortions. These artifacts can undermine creator credibility when audiences mistake enhanced content for AI-generated material, affecting creator-audience trust relationships.

Content Authenticity: The principle that digital content should accurately represent the creator's original intent without undisclosed modifications. YouTube's enhancement system challenges authenticity by altering visual elements without creator knowledge or audience notification. Authenticity concerns become particularly important as AI-generated content becomes more prevalent, requiring clear distinctions between original and modified material.

Platform Transparency: The obligation for technology companies to clearly communicate their content modification practices to users. YouTube's undisclosed AI enhancement experiment violated transparency principles by implementing changes without creator notification. Transparency becomes crucial as platforms increasingly use AI systems that can fundamentally alter user content, affecting both creator rights and audience expectations.

Monetization Policies: Rules governing how creators can earn revenue from their content on YouTube, including guidelines about AI usage and content authenticity. Recent policy updates require disclosure of synthetic content while maintaining eligibility for creators using AI tools appropriately. These policies attempt to balance innovation with authenticity requirements, though enforcement complexity increases as AI capabilities advance.

Digital Marketing: The practice of promoting products and services through online platforms, increasingly incorporating AI-generated content and automated optimization. YouTube's AI modifications affect marketing campaigns by potentially altering how branded content appears to audiences. Marketing professionals must now consider how platform-level AI enhancements might impact campaign messaging and brand representation, adding complexity to content strategy development.

Five Ws Summary

Who: YouTube, the Google-owned video platform, along with content creators Rick Beato and Rhett Shull, who publicly raised concerns about the modifications. YouTube's head of editorial and creator liaison Rene Ritchie provided the official response.

What: YouTube has been using artificial intelligence to automatically enhance videos without creator consent, applying modifications that unblur, denoise, and improve clarity. The changes create visual artifacts that some creators describe as making their content appear AI-generated.

When: The modifications have been occurring since at least June 2025, with YouTube confirming the practice on August 20, 2025, after months of creator complaints.

Where: The AI enhancements affect YouTube Shorts, the platform's short-form video feature, with modifications applied during the video processing stage after upload.

Why: According to YouTube, the enhancements aim to improve video quality and viewer experience, similar to smartphone camera processing. However, creators argue the modifications occur without consent and potentially damage their artistic integrity and audience trust.