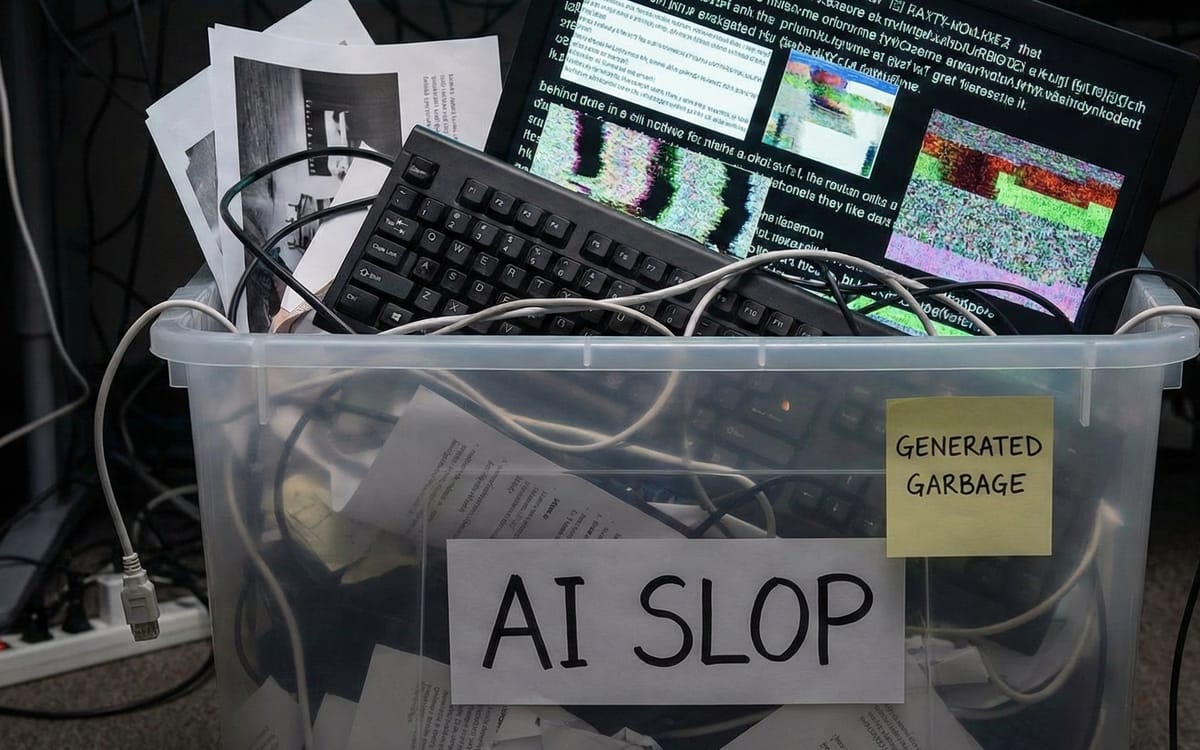

The term "AI slop" entered mainstream vocabulary throughout 2024 as a descriptor for low-quality digital content generated by artificial intelligence tools, typically produced with minimal human oversight or quality control. Merriam-Webster recognized the cultural significance of the phenomenon by selecting "slop" as its 2025 Word of the Year in December 2025.


AI slop refers to low-quality, mass-produced content created by artificial intelligence systems with minimal human oversight or quality control. The term emerged from online communities to describe AI-generated material that lacks authenticity, contains factual errors, or appears formulaic. AI slop typically floods digital platforms with high-volume, low-value content designed primarily to generate advertising revenue rather than provide genuine value to readers or viewers.

British computer programmer Simon Willison is credited with championing the term "slop" in mainstream discourse, having used it on his personal blog in May 2024. However, he acknowledged the term was in use long before he began advocating for its adoption. The term gained increased popularity during the second quarter of 2024 in part because of Google's use of its Gemini AI model to generate responses to search queries.

The term carries a pejorative connotation similar to that of "spam," reflecting widespread frustration with content that prioritizes volume over substance. AI slop has been described as "digital clutter" and "filler content prioritizing speed and quantity over substance and quality."

Core characteristics that identify AI slop

AI slop has several distinctive features that separate it from legitimate AI-assisted content. Superficiality is the most prominent: the content looks informative at a glance but lacks meaningful engagement or novel ideas. This trait stems from generative models producing output optimized for volume rather than depth.

Repetition is another hallmark, evident in formulaic structures, recycled phrasing, and redundant ideas that favor filler over progression. Factual inaccuracies, including hallucinations and outright errors, further undermine reliability: without human oversight, nothing catches the content when it departs from verifiable facts.

Unlike high-quality AI output refined through editing and contextual integration, AI slop typically comes from uncurated, low-effort prompts, and it is marked by an absence of originality, human insight, or adaptive creativity. These flaws appear across formats: generic blog posts that mimic journalistic style without investigative rigor, stock-like images that blend clichés into unremarkable visuals, and scripted videos with neutral narration and predictable storylines that fail to hold interest.

Jonathan Gilmore, a philosophy professor at the City University of New York, has described the material as having an "incredibly banal, realistic style" that is easy for viewers to process.


Types of AI slop across platforms

AI slop proliferates across multiple content formats and distribution channels. On social media, AI image and video slop have become particularly prevalent on Facebook and TikTok, where monetization programs give individuals in developing countries an incentive to create images aimed at audiences in the United States, whose engagement commands higher advertising rates.

AI-generated images of plants and plant care misinformation have proliferated on social media platforms. Online retailers have used AI-generated images of flowers to sell seeds of plants that do not actually exist. Many online houseplant communities have banned AI-generated content but struggle to moderate the large volumes of content posted by bots.

Facebook spammers have reportedly used AI to generate images of Holocaust victims paired with fabricated stories. The Auschwitz Memorial called the images a "dangerous distortion." History-focused Facebook groups have likewise been inundated with AI-generated "historical" photos that bear no relationship to actual historical events.

On publishing platforms, online booksellers and library vendors now carry many AI-written titles that no librarian has curated into a collection. Hoopla, a digital media provider that supplies libraries with ebooks and other downloadable content, offers generative AI books of dubious quality attributed to fictional authors, and libraries pay when unsuspecting patrons check them out.

Scale of the problem

Research published by Kapwing on November 28, 2025, revealed the extent of AI slop's penetration into mainstream platforms. In an analysis of 500 consecutive YouTube Shorts served to new users, 104 videos (about 21%) were AI-generated slop, defined as careless content generated with automated applications and distributed to farm views.

The Guardian's analysis of YouTube's July 2024 figures revealed that nearly one in ten of the fastest-growing YouTube channels globally showed AI-generated content exclusively. EMarketer forecasts that as much as 90% of web content may be AI-generated by 2026, with some artificial intelligence-driven sites producing up to 1,200 articles daily to maximize ad revenue through sheer volume.

Publishing patterns across the web reflect the scale of automated content generation. According to research from Integral Ad Science, 41% of available web supply was published this week, 26% was published today, and 6% was published this hour.

Economic incentives driving production

Platform monetization programs create direct financial rewards for content creation based on engagement metrics, making AI-generated viral content economically attractive despite questionable quality or authenticity. TikTok's Creator Fund offers payments between $0.02 and $0.04 per 1,000 views for creators in the United States, United Kingdom, Germany, Japan, South Korea, France, and Brazil.

While these rates may appear modest, creators have discovered that AI tools can generate hundreds of videos with minimal time investment, potentially scaling earnings significantly. Top AI slop channels generate estimated annual revenues between $4 million and $4.25 million through advertising income alone, creating powerful motivation for content creators to flood platforms with machine-generated videos.
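The payout arithmetic behind this incentive can be sketched in Python. The per-1,000-view range comes from the Creator Fund figures quoted above; the video count and average views are hypothetical inputs chosen for illustration, not reported data, and the sketch ignores eligibility rules, payout minimums, and any other revenue sources.

```python
# Payout range quoted above for TikTok's Creator Fund, in USD per 1,000 views.
RATE_LOW_PER_1K = 0.02
RATE_HIGH_PER_1K = 0.04


def payout_range(total_views: int) -> tuple[float, float]:
    """Return the (low, high) estimated payout in USD for a number of views."""
    thousands = total_views / 1_000
    return thousands * RATE_LOW_PER_1K, thousands * RATE_HIGH_PER_1K


# Hypothetical scenario: both numbers below are illustrative assumptions.
videos = 300
avg_views_per_video = 50_000
total = videos * avg_views_per_video

low, high = payout_range(total)
print(f"{videos} videos x {avg_views_per_video:,} views = {total:,} views")
print(f"Estimated payout: ${low:,.2f} to ${high:,.2f}")
```

At these rates a single video with 1 million views earns roughly $20 to $40, so meaningful income depends on volume, which is exactly what cheap AI generation supplies.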

The journalist Jason Koebler has speculated that the bizarre nature of some of this content may stem from creators writing prompts in Hindi, Urdu, or Vietnamese (languages underrepresented in AI models' training data), or from using erratic speech-to-text methods to translate their intentions into English.

Impact on advertising effectiveness

Research published by Raptive on July 15, 2025, revealed significant consequences for brands advertising alongside content perceived as artificially created. The study commissioned by Raptive surveyed 3,000 U.S. adults and found that suspected AI-generated content reduces reader trust by nearly 50%.

The research documented a 14% decline in both purchase consideration and willingness to pay a premium for products advertised alongside content perceived as AI-made. This skepticism hits brands directly, and the effect was measurable across the content verticals tested, including travel, food, finance, and parenting articles paired with brand advertisements.

Integral Ad Science released its 2026 Industry Pulse Report on December 8, 2025, showing that industry sentiment toward AI-generated content mixes enthusiasm and concern. The research surveyed 290 U.S. digital media experts in October 2025 and found that 61% of respondents expressed excitement about AI developments in digital media and opportunities to advertise alongside AI-generated content. However, 53% simultaneously cited ad adjacency to AI-generated content as a top media challenge for 2026.

Categories of problematic AI content

The research identified specific categories of AI-generated content that media experts would avoid. Content containing inaccurate information or hallucinations ranked highest at 59% avoidance, followed by content providing ad-spammy or cluttered user experiences at 56%.

Fifty-two percent would avoid content from unknown or recently registered domains with no verifiable editorial team, while 51% expressed concern about content attracting non-human bot traffic. Thirty-six percent of respondents indicated they are cautious about advertising within AI-generated content and will take extra precautions before doing so.

Forty-six percent said that the growing volume of brand-unsuitable AI-generated content, and ad adjacency to it, poses a serious threat to media quality. For performance marketing professionals, AI slop presents a dual challenge: ads placed alongside bizarre or misleading AI-generated content raise brand safety concerns, while the algorithmic promotion of engaging AI content can inflate performance metrics.

Programmatic advertising implications

Integral Ad Science published an analysis on July 17, 2025, identifying AI-generated "slop sites" as a critical threat to digital advertising effectiveness. The company classifies these websites as "ad clutter" because of their aggressive monetization strategies and artificially generated content designed primarily to capture advertising revenue rather than provide genuine user value.

Platform-level challenges complicate ad clutter prevention. Analysis of leading demand-side platform (DSP) blocklists revealed that more than 90% of known AI-generated sites were not listed, indicating significant gaps in current prevention methods. This gap calls for dynamic detection systems that can identify new ad clutter operations in real time.
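The difference between a static blocklist and dynamic detection can be illustrated with a hypothetical screening function in Python. It first checks a static blocklist, then falls back to heuristic signals loosely based on the avoidance criteria from the IAS survey above (recently registered domains with no verifiable editorial team, non-human bot traffic, cluttered ad experiences) plus the high publishing volume attributed to some AI-driven sites. The field names, thresholds, and two-signal rule are all assumptions for illustration; this is not how IAS, DoubleVerify, or any DSP actually classifies sites.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
MAX_DOMAIN_AGE_DAYS = 90       # younger domains count as "recently registered"
MAX_BOT_TRAFFIC_SHARE = 0.30   # share of traffic flagged as non-human
MAX_ADS_PER_PAGE = 10          # proxy for an ad-spammy or cluttered experience
MAX_ARTICLES_PER_DAY = 500     # proxy for mass-produced content


@dataclass
class SiteProfile:
    domain: str
    domain_age_days: int
    has_verifiable_editorial_team: bool
    bot_traffic_share: float
    ads_per_page: int
    articles_per_day: int


def risk_signals(site: SiteProfile) -> list[str]:
    """Return the names of the heuristic signals this site trips."""
    signals = []
    if site.domain_age_days < MAX_DOMAIN_AGE_DAYS and not site.has_verifiable_editorial_team:
        signals.append("new domain without verifiable editorial team")
    if site.bot_traffic_share > MAX_BOT_TRAFFIC_SHARE:
        signals.append("high non-human traffic")
    if site.ads_per_page > MAX_ADS_PER_PAGE:
        signals.append("cluttered ad experience")
    if site.articles_per_day > MAX_ARTICLES_PER_DAY:
        signals.append("mass-produced publishing volume")
    return signals


def should_block(site: SiteProfile, blocklist: set[str]) -> bool:
    """Block listed domains outright; otherwise block on two or more signals."""
    if site.domain in blocklist:
        return True
    return len(risk_signals(site)) >= 2


# Usage: a site missing from the static list is still caught by the heuristics.
blocklist = {"known-slop.example"}
new_site = SiteProfile("fresh-slop.example", domain_age_days=20,
                       has_verifiable_editorial_team=False,
                       bot_traffic_share=0.45, ads_per_page=14,
                       articles_per_day=1200)
print(should_block(new_site, blocklist))
print(risk_signals(new_site))
```

The fallback step is what a static list alone lacks: a freshly registered domain cannot appear on any blocklist yet, but its observable characteristics can still flag it.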

While made-for-advertising (MFA) sites may appear successful on metrics like clicks and viewability, DoubleVerify research released on June 14, 2024, points to a different reality. The report found that MFA sites deliver significantly lower attention than other media sources: attention on MFA sites is 7% lower for display ads and 28% lower for video ads.


Detection challenges

Content moderation systems designed to identify spam and low-quality material often fail to catch AI-generated content that successfully engages audiences. The content may be bizarre or misleading, but if it generates legitimate views, likes, and shares, it satisfies most platform quality metrics.

Some platforms have implemented AI content labeling requirements, but these policies typically apply only to realistic content that could be mistaken for authentic media. Clearly artificial content like animated vegetables or impossible scenarios often escapes labeling requirements despite being AI-generated.

Current AI detection systems face accuracy limitations, while consumer perception often relies on subjective quality assessments rather than technical verification. Recent advances in content credentials technology show promise for maintaining provenance metadata, though widespread adoption remains limited.

Industry response

Raptive implemented comprehensive countermeasures against ad clutter by banning AI slop content across its publisher network in 2023. The company subsequently rejected thousands of creators and removed dozens of sites that adopted AI-generated content strategies, demonstrating industry recognition of quality concerns.

Eric Hochberger, CEO of Mediavine, addressed AI challenges during an Ask Me Anything (AMA) session on Reddit on September 17, 2025. Mediavine continues to enforce strict policies against what Hochberger termed "AI Slop" content. The company uses third-party content quality tools alongside human review by its Marketplace Quality team to evaluate publisher content.

The company explicitly prohibits monetizing low-quality, mass-produced, unedited or undisclosed AI content scraped from other websites. Publishers using AI to create untested recipes or low-quality content that devalues legitimate creator contributions face account termination. However, Hochberger emphasized that responsible AI usage remains acceptable.

Platform executive perspectives

Microsoft CEO Satya Nadella launched a personal blog on December 29, 2025, calling for the industry to "get beyond the arguments of slop vs sophistication" just days after admitting to managers that his company's flagship AI product "doesn't really work" and is "not smart."

"We need to get beyond the arguments of slop vs sophistication and develop a new equilibrium in terms of our 'theory of the mind' that accounts for humans being equipped with these new cognitive amplifier tools as we relate to each other," Nadella wrote in the blog post. This philosophical framing conveniently sidesteps technical execution failures.

YouTube CEO Neal Mohan has characterized generative AI as the biggest game-changer for the platform since the original revelation that ordinary people wanted to watch each other's videos. "The genius is going to lie whether you did it in a way that was profoundly original or creative," Mohan stated. "Just because the content is 75 percent AI generated doesn't make it any better or worse than a video that's 5 percent AI generated. What's important is that it was done by a human being."

Distinction from legitimate AI use

The distinction between AI slop and legitimate AI-assisted content centers on intent, oversight, and quality control. Publishers should write original content and use AI for assistance rather than pasting AI-generated output directly into their content management systems.

Every successful publisher will use AI to assist them in the future, according to industry experts. The key differentiator lies in whether AI serves as a tool to enhance human creativity and productivity, or whether it replaces human judgment entirely in pursuit of volume-based monetization.

High-quality AI applications in advertising depend on strategic inputs, which are the primary levers of success. Clean, segmented, and integrated customer data supplies the business intelligence AI systems need to understand the value of prospective customers. Brand-forward creative requires marketers to act as narrative architects, producing a diverse set of strong text, image, and video assets from which AI systems can assemble the best combinations.

Future outlook

As artificial intelligence technologies continue to scale and integrate into content creation workflows, AI slop is expected to persist. Industry observers warn of a drift toward a future saturated with such low-quality output unless advances in model sophistication or content detection tools intervene.

The term "AI slop" may keep evolving or become a fixture of mainstream debates over regulating AI-driven content; its designation as a word of the year already reflects broader cultural anxieties over digital authenticity. Based on 2025 trends, the concept is likely to remain relevant for years as AI permeates everyday digital experiences.

Ad verification companies offer solutions designed to help advertisers identify and avoid MFA sites. These solutions leverage a combination of machine learning and human expertise to analyze website characteristics and traffic patterns. By filtering out suspicious websites, advertisers can reduce exposure to low-quality content and potentially improve campaign performance.


Timeline

- 2022: Early uses of the term "slop" as a descriptor for low-grade AI material appear in reaction to the release of AI image generators

- 2023: Raptive implements AI Slop ban across publisher network

- May 2024: Simon Willison champions term "slop" in mainstream discourse via personal blog

- June 14, 2024: DoubleVerify releases Global Insights Report showing 19% increase in MFA impression volume

- Second quarter 2024: Term "slop" gains increased popularity in digital media discussions

- June 23, 2025: HBO's Last Week Tonight highlights platform monetization driving AI slop epidemic

- July 15, 2025: Raptive publishes a study showing that suspected AI-generated content reduces reader trust by nearly 50%

- July 17, 2025: Integral Ad Science identifies AI-generated slop sites as critical threat

- September 17, 2025: Mediavine CEO addresses AI challenges in Reddit AMA

- November 28, 2025: Kapwing publishes research showing that 21% of YouTube Shorts served to new users are AI slop

- December 8, 2025: Integral Ad Science releases Industry Pulse Report on AI advertising sentiment

- December 2025: Merriam-Webster selects "slop" as 2025 Word of the Year

- December 29, 2025: Microsoft CEO Satya Nadella blogs about AI slop, calling to move beyond quality debates


Summary

Who: AI slop is produced by content creators who use artificial intelligence tools with minimal human oversight, often individuals in developing countries exploiting platform monetization programs. The phenomenon affects advertisers, publishers, platform operators, and consumers who encounter this low-quality content across digital channels.

What: AI slop is low-quality, mass-produced content created by artificial intelligence systems with minimal human oversight or quality control. It lacks authenticity, contains factual errors, appears formulaic, and is designed primarily to generate advertising revenue rather than provide genuine value. The content manifests as generic blog posts, stock-like images, scripted videos, fake social media posts, and automated articles that flood digital platforms.

When: The term entered mainstream use in 2024, though early uses in online communities date to 2022, following the release of AI image generators. British computer programmer Simon Willison championed the term in mainstream discourse through his personal blog in May 2024. The phenomenon gained widespread recognition throughout 2024 and 2025, culminating in Merriam-Webster selecting "slop" as its 2025 Word of the Year in December 2025.

Where: AI slop proliferates across major digital platforms including YouTube, TikTok, Facebook, Instagram, and X (formerly Twitter). It floods social media feeds, search engine results, online publishing platforms, e-commerce sites, and programmatic advertising inventory. EMarketer forecasts that as much as 90% of web content may be AI-generated by 2026.

Why: Economic incentives drive AI slop production, with platform monetization programs creating direct financial rewards based on engagement metrics. Top AI slop channels generate estimated annual revenues between $4 million and $4.25 million through advertising income alone. The ease of generating content with AI tools enables creators to produce hundreds of videos or articles with minimal time investment, making mass production economically attractive despite questionable quality or authenticity.